During a recent project we were required to build a “Logging Forensics Platform”: in essence, a logging platform that can consume data from a variety of sources such as Windows event logs, syslog, flat files and databases. The platform would then be used for queries during forensic investigations and to help follow up on Indicators of Compromise (IoCs). The amount of data generated is quite large, ranging into terabytes of logs and events. This seemed right up Elasticsearch’s alley, and the more we use the system, the better suited to this sort of use case it turns out to be. This article presents some configuration scripts and research links that were used to provision the system, along with some tips and tricks learned during its implementation and R&D.

Helpful Reading

The ELK stack is proving to be a very popular suite of tools, and good documentation abounds on the internet. The official documentation is extremely helpful and is a must-read before starting anything. The following additional links are most definitely useful when using ELK for logging:

http://edgeofsanity.net/article/2012/12/26/elasticsearch-for-logging.html

General Architecture

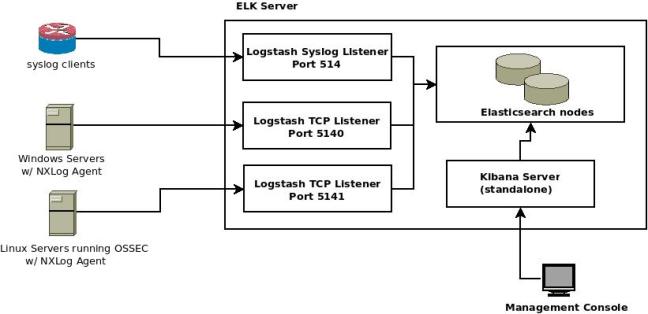

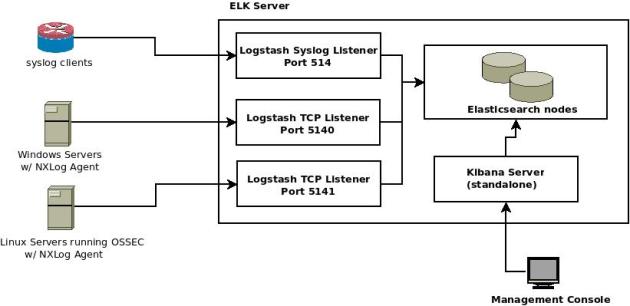

One of the main advantage of ELK is it’s flexibility. There are multiple ways to achieve the desired result, so the rest of this blog post must be taken in context and adapted to your own environment where appropriate. To give some context to the configuration files which follow, below is the high level architecture which was implemented:

Several design considerations led to the above architecture:

1. In this particular environment, there were major discussions around reliability vs performance during log transport. As most of you would know, this roughly translates to a discussion of TCP vs UDP transport. UDP is a lot faster since there is less overhead; however, that same overhead (connection setup, acknowledgements and retransmission) is what lets TCP detect and resend lost packets, making it far less likely that events/logs are lost when there is a disconnection or other network problem. The resulting architecture uses both. Most syslog clients are network nodes such as routers, switches and firewalls, which are very verbose, so performance is more of an issue there. On the actual servers, however, we opted for TCP to avoid losing events: the servers tend to be less verbose, so the reliability gains are worth it for high value assets such as domain controllers.

2. The Logstash default of creating a separate daily index in Elasticsearch turned out to be the most sane setting we found, especially for backup and performance purposes, so we didn't change it.

3. One of the nicest features of Elasticsearch is its analyzers [1], which allow you to do “fuzzy searches” and return results with a “relevance score” [2]. However, when running queries against log data this can actually be a drawback: log messages are more often than not very similar to each other, so keeping the analyzers on returned too many results and made forensic analysis needlessly difficult. The analyzers were therefore switched off, as described in the Logstash section below. Switching off the analyzers also gives a slight performance bonus, which made the solution a very good fit.
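The transport trade-off from the first consideration above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular syslog client is implemented: the collector address and port 5514 are hypothetical, and TCP messages are simply framed with a trailing newline. The UDP sender hands a datagram to the network and returns, typically without learning whether anyone received it, while the TCP sender must first establish a connection and raises an error if the collector is unreachable, which is what gives a client the chance to notice problems and retry.

```python
import socket

# Hypothetical collector address, for illustration only.
COLLECTOR = ("127.0.0.1", 5514)


def send_udp(message: str, addr=COLLECTOR) -> None:
    """Fire and forget: one datagram per message, no acknowledgement of delivery."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), addr)


def send_tcp(messages, addr=COLLECTOR, timeout: float = 5.0) -> None:
    """Connection oriented: newline-framed stream; raises OSError if the collector is unreachable."""
    with socket.create_connection(addr, timeout=timeout) as sock:
        for message in messages:
            sock.sendall(message.encode("utf-8") + b"\n")
```

Note that TCP alone does not make delivery lossless: if the connection drops, the client still has to buffer and resend the events it could not deliver.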
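The daily-index scheme from the second consideration is easy to reason about in code. The sketch below assumes the Logstash default index name pattern logstash-%{+YYYY.MM.dd}, which is derived from the event's @timestamp in UTC; the helper names are hypothetical. Because each day lives in its own index, selecting a date range for backup or removal is a matter of picking index names rather than querying and deleting individual documents.

```python
from datetime import datetime, timedelta, timezone


def daily_index(ts: datetime, prefix: str = "logstash") -> str:
    """Name of the daily index an event with timestamp `ts` lands in (UTC day)."""
    return f"{prefix}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


def indices_older_than(indices, days: int, now: datetime, prefix: str = "logstash"):
    """Daily indices whose day is more than `days` days before `now`, e.g. for backup or deletion."""
    cutoff = (now.astimezone(timezone.utc) - timedelta(days=days)).date()
    old = []
    for name in indices:
        head, _, day_part = name.rpartition("-")
        if head != prefix:
            continue  # not one of our daily indices (e.g. .kibana)
        try:
            day = datetime.strptime(day_part, "%Y.%m.%d").date()
        except ValueError:
            continue  # suffix is not a date
        if day < cutoff:
            old.append(name)
    return old
```

For example, an event logged at 01:30 on 2 March in a UTC+2 time zone still lands in logstash-2015.03.01, because the index date follows the UTC day.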
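To see why analysis hurt in the third consideration, here is a deliberately simplified Python model. The analyze function is only a rough stand-in for an Elasticsearch analyzer (it lowercases and splits on word characters), analyzed_match mimics a match query with its default OR operator, exact_match mimics a term query on a not_analyzed field, and the sample messages are illustrative Windows Security log lines. Against an analyzed field, almost any message sharing common words matches; against a not_analyzed field, only the exact value does.

```python
import re

# Illustrative Windows Security log messages (events 4625, 4624 and 4634).
LOGS = [
    "An account failed to log on.",
    "An account was successfully logged on.",
    "An account was logged off.",
]
QUERY = "An account failed to log on."


def analyze(text: str) -> set:
    """Rough stand-in for an analyzer: lowercase the text and split it into word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def analyzed_match(query: str, value: str) -> bool:
    """Like a match query (default OR operator) on an analyzed field: any shared term is a hit."""
    return bool(analyze(query) & analyze(value))


def exact_match(query: str, value: str) -> bool:
    """Like a term query on a not_analyzed field: the whole stored value must be identical."""
    return query == value


print([m for m in LOGS if analyzed_match(QUERY, m)])  # all three messages
print([m for m in LOGS if exact_match(QUERY, m)])     # only the first
```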

NXLog Windows Configuration:

We ran across an issue when installing the NXLog client on Windows servers. After restarting the NXLog service we would see an error in the logs along the lines of:

apr_sockaddr_info_get() failed

I never figured out the root cause of this error; even adding the appropriate hostnames and DNS entries did not help. However, using the configuration below and reinstalling the client got rid of the error.

## This is a sample configuration file. See the nxlog reference manual about the
## configuration options. It should be installed locally and is also available
## online at http://nxlog.org/nxlog-docs/en/nxlog-reference-manual.html

## Please set the ROOT to the folder your nxlog was installed into,
## otherwise it will not start.

#define ROOT C:\Program Files\nxlog
define ROOT C:\Program Files (x86)\nxlog

Moduledir %ROOT%\modules
CacheDir %ROOT%\data
Pidfile %ROOT%\data\nxlog.pid
SpoolDir %ROOT%\data
LogFile %ROOT%\data\nxlog.log

<Extension _syslog>
    Module xm_syslog
</Extension>

<Extension json>
    Module xm_json
</Extension>

# Windows event log channels, converted to JSON
<Input in>
    Module im_msvistalog
    Exec to_json();
    Query <QueryList>\
        <Query Id="0">\
            <Select Path="Microsoft-Windows-TaskScheduler/Operational">*</Select>\
        </Query>\
        <Query Id="1">\
            <Select Path="Application">*</Select>\
        </Query>\
        <Query Id="2">\
            <Select Path="Security">*</Select>\
        </Query>\
        <Query Id="3">\
            <Select Path="System">*</Select>\
        </Query>\
    </QueryList>
</Input>

# Flat text file of DNS logs, read line by line and converted to JSON
<Input DNS_LOGS>
    Module im_file
    File "C:\\DNSLogs\\dns.txt"
    InputType LineBased
    Exec $Message = $raw_event;
    Exec to_json();
    SavePos TRUE
</Input>

# Logstash tcp input for Windows events
<Output out>
    Module om_tcp
    Port 5140
    Host 192.168.10.175
</Output>

# Local file output, handy for debugging a route
<Output out_debug>
    Module om_file
    File "C:\\nxlog_debug.log"
</Output>

<Route 1>
    Path in => out
</Route>

<Route 2>
    #Path DNS_LOGS => out_debug
    Path DNS_LOGS => out
</Route>

One point of interest in the above configuration is the query for the Microsoft-Windows-TaskScheduler/Operational channel. By default NXLog will monitor the Application, Security, and System event logs. This configuration sample also shows how to monitor the post-2003 style event log “containers” (channels), where Windows now stores application specific logs that are useful to monitor.
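If more channels need to be collected, the Query directive can be generated rather than typed by hand. The following is a hypothetical Python helper that renders the same QueryList structure as the configuration above: one Query element per channel, each selecting every event (*), with the trailing backslashes NXLog uses to continue a directive across lines.

```python
from xml.sax.saxutils import escape


def build_query(channels) -> str:
    """Render an NXLog Query directive selecting every event from each channel.

    Every line except the last ends with a backslash, so NXLog reads the
    whole QueryList as a single directive value.
    """
    lines = ["Query <QueryList>\\"]
    for query_id, channel in enumerate(channels):
        path = escape(channel, {'"': "&quot;"})  # safe inside the Path="..." attribute
        lines.append(f'    <Query Id="{query_id}">\\')
        lines.append(f'        <Select Path="{path}">*</Select>\\')
        lines.append("    </Query>\\")
    lines.append("</QueryList>")
    return "\n".join(lines)
```

Calling build_query(["Microsoft-Windows-TaskScheduler/Operational", "Application", "Security", "System"]) produces a block equivalent to the Query directive in the configuration above.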

Also note the use of the to_json() procedure, provided by the xm_json extension module, which converts each message to JSON format. We will use this later when configuring Logstash.
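Conceptually, each event then leaves the agent as one JSON object per line. The Python sketch below imitates that framing; it is not NXLog's implementation, and the field names are modeled on the EventTime and Message fields used in the Logstash filters later in this post, with Hostname and EventID added for illustration. A useful side effect of JSON encoding is that embedded line breaks, common in Windows event messages, are escaped, so a multi-line message still travels as a single line.

```python
import json


def to_json_line(fields: dict) -> str:
    """Serialize one event as a single-line JSON object terminated by a newline."""
    return json.dumps(fields, ensure_ascii=False) + "\n"


def parse_json_lines(stream: str) -> list:
    """Receiving side: split the stream on newlines and decode each JSON object."""
    return [json.loads(line) for line in stream.split("\n") if line.strip()]


event = {
    "EventTime": "2015-03-01 10:15:00",
    "Hostname": "DC01",
    "EventID": 4625,
    "Message": "An account failed to log on.\r\n\r\nSubject:",
}
```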

NXLog installation instructions on AlienVault:

One of the requirements of the environment where this forensic logging platform was installed was integration with the AlienVault Enterprise appliance, so that logs from these systems could be sent to the Elasticsearch nodes. Here are the steps that worked for us:

1. Installation via the pre-built binaries did not work. Luckily, building from source is very easy: download the tar.gz source package from the NXLog community site.

2. Before proceeding, install the dependencies and prerequisites:

apt-get update
apt-get install build-essential libapr1-dev libpcre3-dev libssl-dev libexpat1-dev

3. Extract the tar.gz file, change directory into the extracted folder and run the usual configure, make, make install:

./configure
make
make install

NXLog configuration (Linux):

The rest of the configuration for AlienVault servers is the same as for a generic Linux host, with the exception that the config file below monitors OSSEC logs; you may need to change this depending on what you would like to monitor.

## This is a sample configuration file. See the nxlog reference manual about the
## configuration options. It should be installed locally and is also available
## online at http://nxlog.org/nxlog-docs/en/nxlog-reference-manual.html

## Please set the ROOT to the folder your nxlog was installed into,
## otherwise it will not start.

define ROOT /nxlog
#define ROOT C:\Program Files (x86)\nxlog

Moduledir /usr/local/libexec/nxlog/modules
CacheDir %ROOT%/data
Pidfile %ROOT%/data/nxlog.pid
SpoolDir %ROOT%/data
LogFile %ROOT%/data/nxlog.log

<Extension _syslog>
    Module xm_syslog
</Extension>

<Extension json>
    Module xm_json
</Extension>

# Tail the OSSEC archive log on the AlienVault appliance
<Input in>
    Module im_file
    File '/var/ossec/logs/archives/archives.log'
    SavePos TRUE
    ReadFromLast TRUE
    PollInterval 1
    Exec $Message = $raw_event;
    # Send JSON to match the json codec on the Logstash tcp input (port 5141)
    Exec to_json();
</Input>

<Output out>
    Module om_tcp
    Port 5141
    Host 192.168.10.175
</Output>

<Route 1>
    Path in => out
</Route>
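The im_file directives above control where reading starts: SavePos TRUE persists the file position so that a restart resumes where NXLog left off, ReadFromLast TRUE makes it start at the end of the file when no saved position exists, and PollInterval 1 checks for new data every second. A rough Python analogue of that behaviour might look like the following; it is a sketch, not NXLog's implementation, and the JSON state file holding the offset is a hypothetical stand-in for NXLog's own cache.

```python
import json
import os


def read_new_lines(path: str, state_file: str, read_from_last: bool = True) -> list:
    """Return complete lines appended to `path` since the last call, persisting the offset."""
    try:
        with open(state_file) as f:
            offset = json.load(f)["offset"]  # like SavePos: resume from the saved position
    except FileNotFoundError:
        # No saved position yet: start at the end (ReadFromLast) or at the beginning.
        offset = os.path.getsize(path) if read_from_last else 0
    if os.path.getsize(path) < offset:
        offset = 0  # file shrank (truncated or rotated): start over
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Only consume complete lines; a partial trailing line is picked up next time.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    with open(state_file, "w") as f:
        json.dump({"offset": offset + end}, f)
    return lines
```

A caller would invoke read_new_lines once per poll interval. The truncation check here is a crude stand-in for proper log rotation handling.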

Logstash configuration on Elasticsearch:

This leaves us with the Logstash configuration necessary to receive and parse these events. As noted above, we first need to switch off the Elasticsearch analyzers. There are a couple of ways to do this; the easiest we found was to modify the index template [3] that Logstash uses and switch off the analyzers there. This is very simple to do:

– First, change the default template to remove analysis of text/string fields:

vim ~/logstash-1.4.2/lib/logstash/outputs/elasticsearch/elasticsearch-template.json

– Change the default “string” mapping to not_analyzed (line 14 of the default template file in v1.4.2):

analyzed –> not_analyzed

– Point the Logstash configuration to the new template (see the template option of the elasticsearch output in the sample configuration below)

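– Restart Logstash. Index templates are only applied when an index is created, so if need be, delete any existing logstash indices so that they are recreated with the new mapping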
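The same template change can be scripted, which helps when provisioning several nodes. The Python sketch below assumes the Logstash 1.4-style template layout (mappings, then a _default_ type with dynamic_templates whose string rule carries "index" : "analyzed"); check your own template before relying on it. The function names are hypothetical.

```python
import json


def disable_string_analysis(template: dict) -> dict:
    """Switch every dynamic template that maps strings to "index": "not_analyzed".

    Assumes the layout mappings -> <type> -> dynamic_templates, where each entry
    is {name: {"match_mapping_type": ..., "mapping": {...}}}.
    """
    for type_mapping in template.get("mappings", {}).values():
        for entry in type_mapping.get("dynamic_templates", []):
            for rule in entry.values():
                mapping = rule.get("mapping", {})
                if rule.get("match_mapping_type") == "string" and mapping.get("index") == "analyzed":
                    mapping["index"] = "not_analyzed"
    return template


def rewrite_template_file(path: str) -> None:
    """Load the template JSON at `path`, switch off string analysis and save it back."""
    with open(path) as f:
        template = json.load(f)
    disable_string_analysis(template)
    with open(path, "w") as f:
        json.dump(template, f, indent=2)
```

For example, rewrite_template_file("/callHome/logstash-1.4.2/lib/logstash/outputs/elasticsearch/elasticsearch-template.json") would update the template referenced in the configuration below.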

input {
  # JSON events from the NXLog agents on the Windows servers
  tcp {
    port => 5140
    type => "windows-events"
    codec => json {
      charset => "CP1252"
    }
  }
  # JSON events from the NXLog agent on the AlienVault appliance
  tcp {
    port => 5141
    type => "ossec-events"
    codec => json {
      charset => "CP1252"
    }
  }
  # Plain syslog from network devices (default port 514, TCP and UDP)
  syslog {
    type => "syslog"
  }
}

filter {
  # Keep the address of the sending host in its own field
  mutate {
    add_field => { "Sender_IP" => "%{host}" }
  }
  if [type] == "windows-events" {
    if [Message] =~ /My first log .*/ {
      grok {
        match => [ "Message", 'My first log %{GREEDYDATA:custom_message}' ]
        add_tag => ["grokked"]
      }
    }
    # Use the time the event was logged rather than the time it was received
    date {
      match => ["[EventTime]", "YYYY-MM-dd HH:mm:ss"]
    }
  }
  if [type] == "ossec-events" {
    # Tag every event from the AlienVault appliance
    mutate {
      add_tag => ["AlienVault"]
    }
  }
}

output {
  # Echo events to the console while debugging
  stdout { }
  elasticsearch {
    host => "localhost"
    # Custom template with string analysis switched off
    template => "/callHome/logstash-1.4.2/lib/logstash/outputs/elasticsearch/elasticsearch-template.json"
    template_overwrite => true
  }
}

Also note the charset => "CP1252" settings on the json codecs of the two tcp inputs in the above config file. These are needed because we are converting messages into JSON format in the NXLog client, and the Logstash documentation [4] states that:

For nxlog users, you’ll want to set this to “CP1252”.
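The charset setting tells Logstash the actual encoding of the incoming text so that it can convert it to the UTF-8 that JSON requires. A small Python illustration, assuming a sender that emits CP1252 bytes and using an illustrative message, shows what goes wrong when such bytes are read as UTF-8 instead:

```python
def decode_payload(payload: bytes, charset: str) -> str:
    """Decode bytes received from the network, replacing undecodable bytes with U+FFFD."""
    return payload.decode(charset, errors="replace")


# Illustrative message containing a non-ASCII character, as a CP1252 sender would emit it.
original = 'Benutzer "Müller" hat sich angemeldet'
payload = original.encode("cp1252")  # "ü" becomes the single byte 0xFC

print(decode_payload(payload, "cp1252"))  # the original text
print(decode_payload(payload, "utf-8"))   # "ü" is replaced by U+FFFD
```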

In a future article we’ll go into more depth on the above Logstash configuration and how it can be used to parse messages into meaningful data.
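In the meantime, the parsing done by the windows-events branch of the filter can be approximated in Python to make its behaviour concrete. The sketch assumes GREEDYDATA corresponds to the regular expression .* (its definition in the standard grok patterns) and translates the Joda-style date pattern YYYY-MM-dd HH:mm:ss to the strptime format %Y-%m-%d %H:%M:%S; time zones are ignored for simplicity, and the function name is hypothetical.

```python
import re
from datetime import datetime

# 'My first log %{GREEDYDATA:custom_message}' with GREEDYDATA expanded to .*
MY_FIRST_LOG = re.compile(r"My first log (?P<custom_message>.*)")


def parse_windows_event(event: dict) -> dict:
    """Approximate the windows-events filter branch on a plain dict (time zones ignored)."""
    match = MY_FIRST_LOG.search(event.get("Message", ""))
    if match:  # grok succeeded: store the captured field and add the tag
        event["custom_message"] = match.group("custom_message")
        event.setdefault("tags", []).append("grokked")
    if "EventTime" in event:
        try:
            # Joda "YYYY-MM-dd HH:mm:ss" expressed as a strptime format
            event["@timestamp"] = datetime.strptime(event["EventTime"], "%Y-%m-%d %H:%M:%S")
        except ValueError:
            pass  # unparseable: Logstash would keep the time the event was received
    return event
```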

References:

[1] Elasticsearch Analyzers: http://www.elastic.co/guide/en/elasticsearch/reference/1.4/indices-analyze.html

[2] Elasticsearch relevance: http://www.elastic.co/guide/en/elasticsearch/guide/master/controlling-relevance.html

[3] Elasticsearch Index Templates: http://www.elastic.co/guide/en/elasticsearch/reference/1.x/indices-templates.html

[4] Logstash JSON documentation: http://logstash.net/docs/1.4.2/codecs/json
